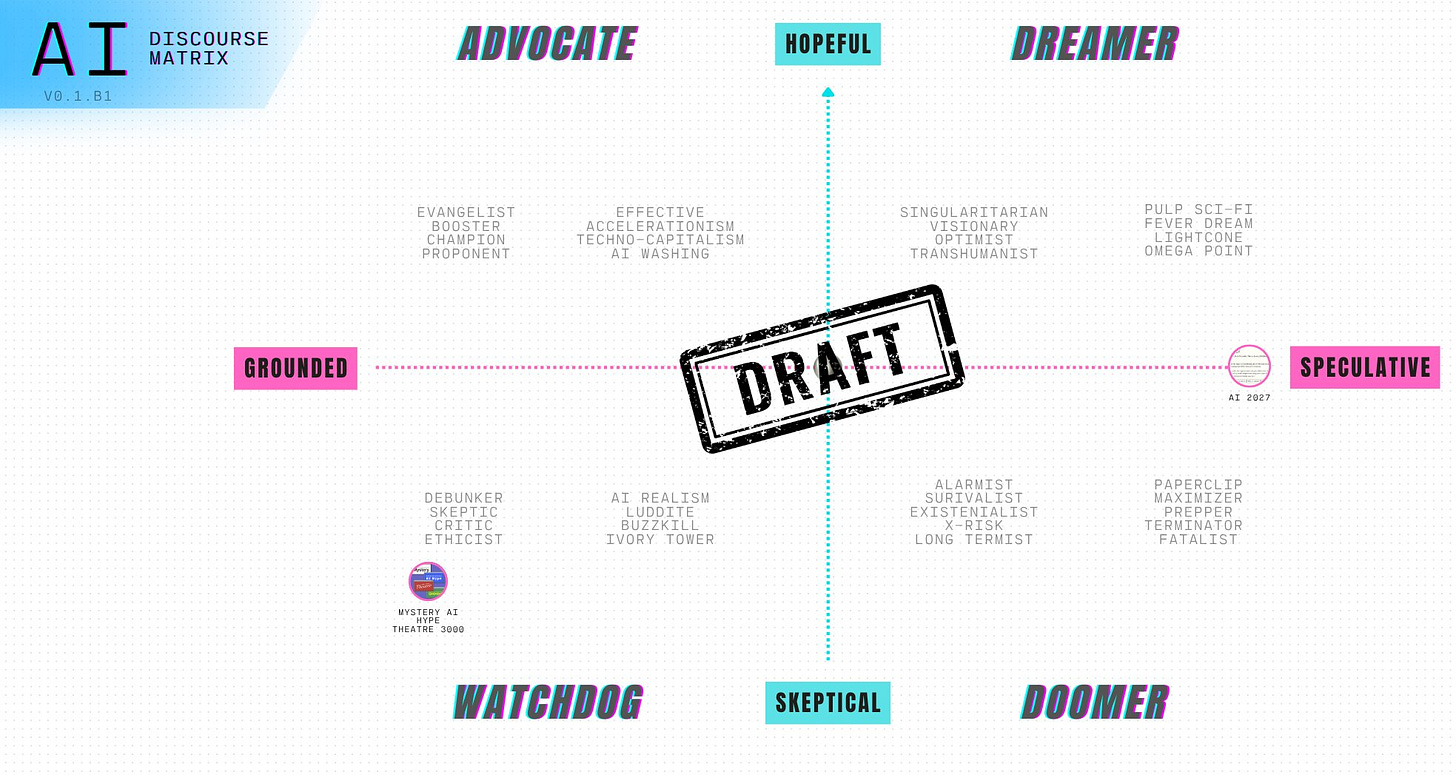

Above is a beta version of a quadrant/matrix-like graph I’m working on. I’ve used this kind of matrix previously in my posts on the “AI Hot Take Matrix”, and I’m continuing to build on the model here in the hope of managing all the thinking around AI and what it means.

Fever Dream

The potential capabilities of AI are difficult to grasp. Are large language models (LLMs) ineffective and a waste of time? Will the stock market crash when we realise that AI is not as effective as we thought? Or will AI work so well that everyone will either lose their jobs or enjoy a universal basic income? Can you “smell the AGI”, and will we become transhumanists with uploaded minds? The problem is that anything seems possible right now. Possible, though not necessarily plausible or realistic.

With so much at stake, it's a challenging situation. On the one hand, AI is still hilariously bad at some things. It can't really 'think' or 'reason', but it can write code, generate text and produce reasonable images. On the other hand, it can innovate and invent: for example, AlphaZero's mastery of chess and AlphaGo's famous Move 37 in Go. It may also be able to create new and better algorithms and discover new drugs. Not all of us can read the papers coming out of advanced AI labs and form accurate opinions on them.

During the games, AlphaGo played several inventive winning moves. In game two, it played Move 37 — a move that had a 1 in 10,000 chance of being used. This pivotal and creative move helped AlphaGo win the game and upended centuries of traditional wisdom. - Deepmind

These results are impressive: given 50 open math problems, the AI rediscovered the leading approach 75% of the time & improved on it 20% of the time - Ethan Mollick on AlphaEvolve

If you are a sci-fi fan, it’s easy to get lost in the fog of AI possibilities. The truth is that we simply don’t know what AI is capable of; we can only speculate, and this uncertainty poses major economic, social and political problems.

So I think it’s good to take a step back and pay attention to what is being said and where it lies on the matrix. The discourse is fascinating on its own!

AI Discourse Matrix

Quadrants

Quadrant 1: Advocate

Optimistic about AI but focused on practical, near-term applications and incremental progress. Values real-world implementation and business value over speculative futures.

Quadrant 2: Dreamer

Enthusiastically optimistic about AI's transformative potential, with focus on long-term, paradigm-shifting possibilities rather than current limitations.

Quadrant 3: Watchdog

Cautious or critical about AI development, with focus on rigorous assessment of current capabilities and limitations rather than speculative risks.

Quadrant 4: Doomer

Concerned about AI's long-term risks and existential implications, focusing on speculative scenarios involving superintelligence and alignment failures.

In the matrix, I use words that might sound derogatory, such as 'pulp sci-fi', 'fever dream', 'paperclip maximiser', 'prepper', 'Terminator scenario' and 'killjoy', to name a few. I hope that I don't use them carelessly; ultimately, I am really trying to understand the discourse, including the actual words used and their potential meanings. I use these words to demonstrate a spectrum ranging from positive to negative, because we must pay attention to the full range, difficult though that may be.

More to come as I work on this!

Example Content

Here are a couple of great examples of content that are clearly at odds with one another. One is grounded and sceptical. The other is quite speculative though surprisingly near-term (2027!), and discusses both dreamer and doomer scenarios. For me, these two pieces represent loci of competing frameworks, and there is a gradient of discourse between these poles to be considered…somehow.

Can we enter into this discourse matrix and come out with something valuable?

Mystery AI Hype Theatre

AGI: "Imminent", "Inevitable", and Inane, 2025.04.21