The ability of large language models (LLMs) to "hack" is an important topic in AI and cybersecurity. In a previous post, I examined some of the basics, particularly LLMs' ability to write malicious code. That post was published more than a year ago. At the time, we weren't sure that LLMs could write code well. Now, however, that seems to be the one thing we can be fairly confident about. If LLMs can write code, then they can also write insecure or malicious code. While most LLMs accessible to the average person have guardrails meant to prevent the creation of malicious code, state-level actors and anyone who can retrain an open-source LLM can certainly use them to write it.

But malicious code isn’t enough. Truly breaking into systems means following a complex and meandering process.

The Process of Hacking

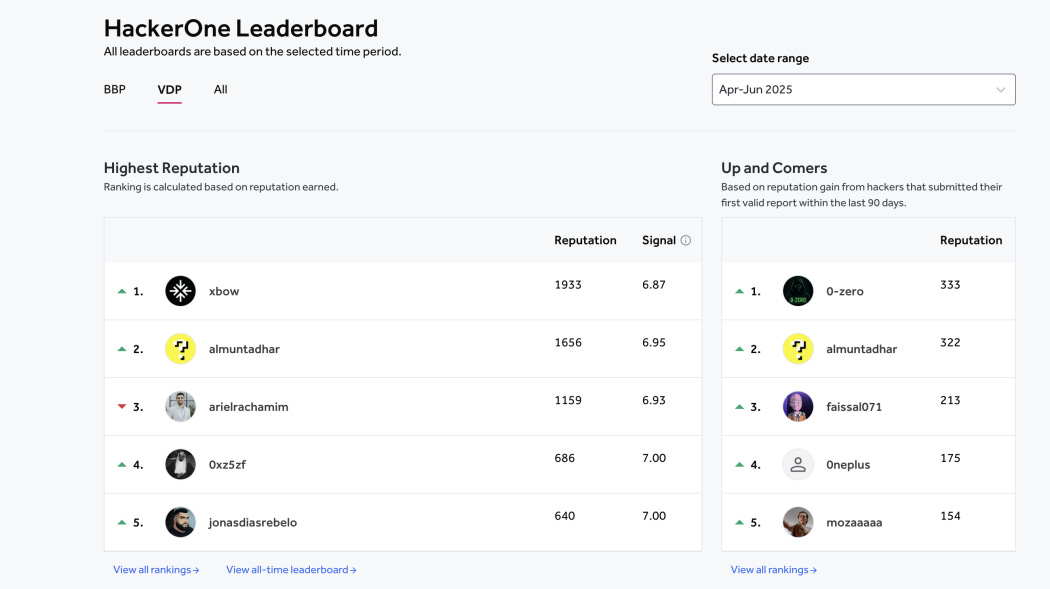

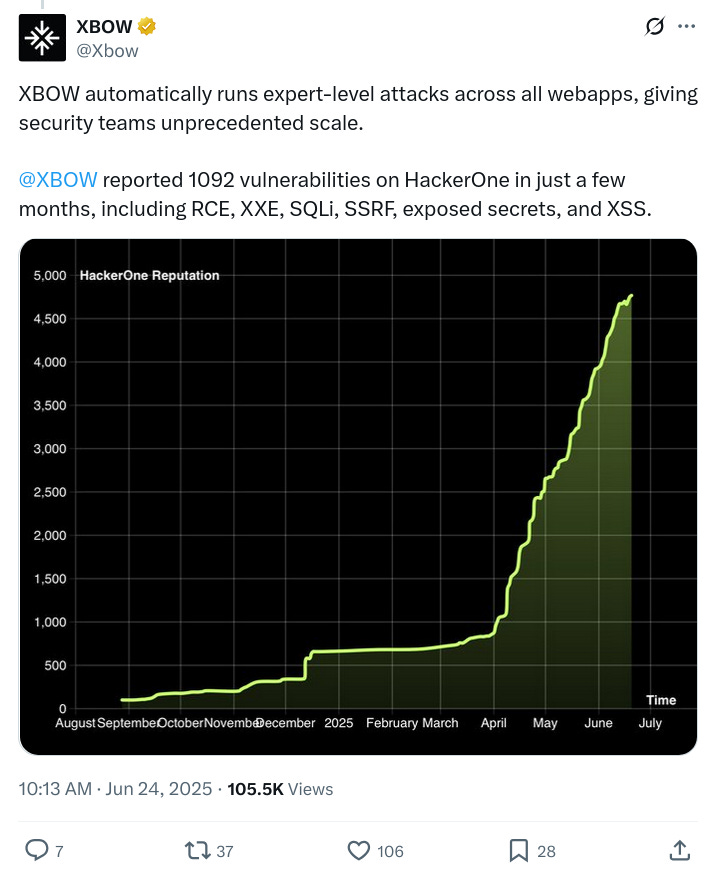

For the first time in bug bounty history, an autonomous penetration tester has reached the top spot on the US leaderboard. - https://xbow.com/blog/top-1-how-xbow-did-it/

Another open question at the time was whether LLMs could facilitate the process of hacking. Knowing how to write malicious code is one thing, but knowing what to hack and how to do it is another. For example, which systems are vulnerable? Where would malicious code be applied? And how could we move from system to system to gain access and data? This is a special kind of capability that LLMs did not seem to have at the time.

However, XBOW has now reached the top of HackerOne's US leaderboard, and its hackers are AI agents, not people. These agents follow, at times, a non-linear process.

Beyond winning the top hacker spot, the year-old startup has also raised $117 million in seed funding to date from financiers including Sequoia Capital and the venture capital firm NFDG. Speaking to Bloomberg, NFDG partner Nat Friedman said that although Xbow's AI is "exciting," he also finds it "somewhat terrifying." - https://futurism.com/ai-hacking-leaderboard-number-one

What XBOW’s AI Agent Did

Overall, the industry is having a difficult time creating useful AI agents of any kind, let alone ones that can do something as complex as cracking software. That said, we are certainly getting better at it, and I expect that will continue.

In a blog post, XBOW explains how its AI agent found a real vulnerability, one that now has a CVE entry (CVE-2025-0133).

As part of one of our bug bounty runs on HackerOne, XBOW was pointed toward a web application that appeared to be a GlobalProtect VPN instance, with the goal of identifying potential XSS vulnerabilities.

XBOW started with a comprehensive application review, examining the GlobalProtect portal’s structure and functionality. Its first move was to gather basic information about the target… - https://xbow.com/blog/xbow-globalprotect-xss/

Only part of the work it did was writing malicious code.

XBOW’s approach to finding vulnerabilities went beyond simple and systematic endpoint testing. It performed a thorough client-side analysis, examining JavaScript files, HTML structure, and network requests to identify potential attack vectors. This deep inspection revealed several interesting aspects of the application…
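To make "client-side analysis" a little more concrete, here is a minimal sketch of the most basic form of it: taking inventory of the scripts, forms, and input fields on a single HTML page. This is not XBOW's code. The `SurfaceInventory` class, the `inventory` function, and the sample page are all hypothetical, and the sketch uses only Python's standard `html.parser` module and never touches a network.

```python
from html.parser import HTMLParser


class SurfaceInventory(HTMLParser):
    """Collects script sources, form actions, and input names from one page."""

    def __init__(self):
        super().__init__()
        self.scripts = []
        self.forms = []
        self.inputs = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "script" and attributes.get("src"):
            self.scripts.append(attributes["src"])
        elif tag == "form":
            # A form without an action posts back to the current URL.
            self.forms.append(attributes.get("action") or "")
        elif tag == "input" and attributes.get("name"):
            self.inputs.append(attributes["name"])


def inventory(html_text):
    """Returns a dict summarizing what a page exposes to the browser."""
    parser = SurfaceInventory()
    parser.feed(html_text)
    parser.close()
    return {
        "scripts": parser.scripts,
        "forms": parser.forms,
        "inputs": parser.inputs,
    }


# Hypothetical page for illustration; not taken from any real portal.
SAMPLE_PAGE = """
<html><head><script src="/static/app.js"></script></head>
<body><form action="/login" method="post">
<input name="user"><input name="token" type="hidden">
</form></body></html>
"""

if __name__ == "__main__":
    print(inventory(SAMPLE_PAGE))
```

A list like this is only a starting point. The interesting part of what XBOW describes is deciding which of those scripts, forms, and parameters deserve a closer look.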

It’s well worth reading that blog post in full, but overall, these were the high-level points:

Systematic Approach: XBOW didn't just randomly test but followed a logical progression from reconnaissance to exploitation

Persistence: When initial attempts failed, it continued searching for alternative vectors

Deep Analysis: It understood XML contexts and crafted sophisticated payloads using SVG and XHTML namespaces

Variant Analysis: After finding one vulnerability, it systematically searched for similar issues

Continuous Testing: It automatically re-tested after mitigation was implemented and successfully bypassed the initial fix
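The points above describe a loop more than a checklist. As a rough illustration only, the toy sketch below models that loop as a work queue: misses are skipped (persistence), a hit pushes the same technique to the front of the queue for the other endpoints (variant analysis), and earlier findings are checked again later (continuous testing). Everything here is an assumption made for illustration. The `probe` callback, the endpoint names, and the technique labels are hypothetical, and the "target" is just a Python set, not a real system. XBOW has not published its agent loop in this form.

```python
from collections import deque


def run_assessment(endpoints, techniques, probe):
    """Works through (endpoint, technique) pairs, re-prioritizing after a hit.

    `probe(endpoint, technique) -> bool` is a hypothetical callback standing
    in for whatever check an agent would run. Here it is only simulated.
    """
    queue = deque((e, t) for e in endpoints for t in techniques)
    tried = set()
    findings = []
    while queue:
        pair = queue.popleft()
        if pair in tried:
            continue
        tried.add(pair)
        endpoint, technique = pair
        if not probe(endpoint, technique):
            continue  # Persistence: a miss just means moving to the next idea.
        findings.append(pair)
        # Variant analysis: try what just worked on the other endpoints next.
        for other in reversed(endpoints):
            if (other, technique) not in tried:
                queue.appendleft((other, technique))
    return findings


def retest(findings, probe):
    """Continuous testing: returns the findings that still reproduce."""
    return [pair for pair in findings if probe(*pair)]


def make_simulated_target(weak_pairs):
    """Builds a fake probe backed by a set, so nothing touches a network."""
    return lambda endpoint, technique: (endpoint, technique) in weak_pairs


if __name__ == "__main__":
    endpoints = ["/a", "/b", "/c"]
    techniques = ["t1", "t2"]
    before_fix = make_simulated_target({("/a", "t2"), ("/c", "t2")})
    found = run_assessment(endpoints, techniques, before_fix)
    print("found:", found)
    # Simulate a partial fix that closes one issue but leaves the other open.
    after_fix = make_simulated_target({("/c", "t2")})
    print("still open:", retest(found, after_fix))
```

The design choice worth noticing is the `appendleft` call: the order of work changes based on what has been learned so far, which is a small version of the non-linear process described earlier.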

Early Days Still

I have to keep emphasizing that this is a cybersecurity startup. Imagine what other organizations are doing: groups that are not trying to raise money or find customers and that, in fact, operate in secret. If a startup can do this with the resources it has, imagine what a capable nation-state could do.

Further Reading

https://futurism.com/ai-hacking-leaderboard-number-one

https://xbow.com/blog/top-1-how-xbow-did-it/

https://xbow.com/blog/xbow-globalprotect-xss/

https://security.paloaltonetworks.com/CVE-2025-0133